Preface and Acknowledgments

I finished writing Energy in World History in July 1993; the book came out in 1994, and it remained in print for two decades. Since 1994 energy studies have been through a period of great expansion, and I have added to it by publishing nine books dealing explicitly with energy matters and a dozen interdisciplinary books with significant energy components. Consequently, once I decided to revisit this fascinating topic it was obvious that superficial updating would not do. As a result, this is a substantially new book with a new title: the text is nearly 60% longer than the original, and there are 40% more images and more than twice as many references. Boxes sprinkled throughout the book contain some surprising calculations, as well as many detailed explanations of important topics and essential tables. I have also quoted from sources ranging from the Classics—Apuleius, Lucretius, Plutarch—to nineteenth- and twentieth-century observers such as Braudel, Eden, Orwell, and Senancour. Graphics were updated and created by Bounce Design in Winnipeg; two dozen archival photographs were secured from Corbis in Seattle by Ian Saunders and Anu Horsman. As is always the case with interdisciplinary studies of this kind, this book would not have been possible without the work of hundreds of historians, scientists, engineers, and economists.

Winnipeg, August 2016

1 Energy and Society

Energy is the only universal currency: one of its many forms must be transformed to get anything done. Universal manifestations of these transformations range from the enormous rotations of galaxies to thermonuclear reactions in stars. On Earth they range from the terra-forming forces of plate tectonics that part ocean floors and raise new mountain ranges to the cumulative erosive impacts of tiny raindrops (as the Romans knew, gutta cavat lapidem non vi, sed saepe cadendo—A drop of water hollows a stone not by force but by continually dripping). Life on Earth—despite decades of attempts to catch a meaningful extraterrestrial signal, still the only life in the universe we know of—would be impossible without the photosynthetic conversion of solar energy into phytomass (plant biomass). Humans depend on this transformation for their survival, and on many more energy flows for their civilized existence. As Richard Adams (1982, 27) put it,

We can think thoughts wildly, but if we do not have the wherewithal to convert them into action, they will remain thoughts. … History acts in unpredictable ways. Events in history, however, necessarily take on a structure or organization that must accord with their energetic components.

The evolution of human societies has resulted in larger populations, a growing complexity of social and productive arrangements, and a higher quality of life for a growing number of people. From a fundamental biophysical perspective, both prehistoric human evolution and the course of history can be seen as the quest for controlling greater stores and flows of more concentrated and more versatile forms of energy and converting them, in more affordable ways at lower costs and with higher efficiencies, into heat, light, and motion. This tendency has been generalized by Alfred Lotka (1880–1949), an American mathematician, chemist, and statistician, in his law of maximum energy: “In every instance considered, natural selection will so operate as to increase the total mass of the organic system, to increase the rate of circulation of matter through the system, and to increase the total energy flux through the system so long as there is present an unutilized residue of matter and available energy” (Lotka 1922, 148).

The history of successive civilizations, the largest and most complex organisms in the biosphere, has followed this course. Human dependence on ever higher energy flows can be seen as an inevitable continuation of organismic evolution. Wilhelm Ostwald (1853–1932, recipient of the 1909 Nobel Prize in Chemistry for his work on catalysis) was the first scientist to expand explicitly “the second law of energetics to all and any action and in particular to the totality of human actions. … All energies are not ready for this transformation, only certain forms which have been therefore given the name of the free energies. … Free energy is therefore the capital consumed by all creatures of all kinds and by its conversion everything is done” (Ostwald 1912, 83). This led him to formulate his energetic imperative: “Vergeude keine Energie, verwerte sie”—“Do not waste any energy, make it useful” (Ostwald 1912, 85).

Two quotations illustrate how Ostwald’s followers have been restating his conclusions and how some of them have made the link between energy and all human affairs even more deterministically explicit. In the early 1970s Howard Odum (1924–2002) offered a variation on Ostwald’s key theme: “The availability of power sources determines the amount of work activity that can exist, and control of these power flows determines the power in man’s affairs and in his relative influence on nature” (Odum 1971, 43). In the late 1980s Ronald Fox concluded a book on energy in evolution by writing that “a refinement in cultural mechanisms has occurred with every refinement of energy flux coupling” (Fox 1988, 166).

One does not have to be a scientist to make the link between energy supply and social advances. This is Eric Blair (George Orwell, 1903–1950), writing in 1937 in the second chapter of The Road to Wigan Pier, after his visit to an underground coal mine:

Our civilization, pace Chesterton, is founded on coal, more completely than one realizes until one stops to think about it. The machines that keep us alive, and the machines that make machines, are all directly or indirectly dependent upon coal. In the metabolism of the Western world the coal-miner is second in importance only to the man who ploughs the soil. He is a sort of caryatid upon whose shoulders nearly everything that is not grimy is supported. For this reason the actual process by which coal is extracted is well worth watching, if you get the chance and are willing to take the trouble. (Orwell 1937, 18)

But restating that fundamental link (as Orwell did) and claiming that cultural refinements have taken place with every refinement of energy flux (as Fox does) are two different things. Orwell’s conclusion is unexceptionable. Fox’s phrasing is clearly a restatement of a deterministic view expressed two generations earlier by the anthropologist Leslie White (1900–1975), who called it the first important law of cultural development: “Other things being equal, the degree of cultural development varies directly as the amount of energy per capita per year harnessed and put to work” (White 1943, 346). While there can be no dispute either about Ostwald’s fundamental formulation or about energy’s all-encompassing effect on the structure and dynamics of evolving societies (as Orwell recognized), a deterministic linking of the level of energy use with cultural achievements is a highly arguable proposition. I examine that causality (or the lack of it) in the book’s closing chapter.

The fundamental nature of the concept is not in doubt. As Robert Lindsay (1975, 2) put it,

If we can find a single word to represent an idea which applies to every element in our existence in a way that makes us feel we have a genuine grasp of it, we have achieved something economical and powerful. This is what has happened with the idea expressed by the word energy. No other concept has so unified our understanding of experience.

But what is energy? Surprisingly, even Nobel Prize winners have great difficulty in giving a satisfactory answer to that seemingly simple question. In his famous Lectures on Physics, Richard Feynman (1918–1988) stressed that “it is important to realize that in physics today, we have no knowledge of what energy is. We do not have a picture that energy comes in little blobs of a definite amount” (Feynman 1988, 4–2).

What we do know is that all matter is energy at rest, that energy manifests itself in a multitude of ways, and that these distinct energy forms are linked by numerous conversions, many of them universal, ubiquitous, and incessant, others highly localized, infrequent, and ephemeral (fig. 1.1). The understanding of these stores, potentials, and transformations was rapidly expanded and systematized mostly during the nineteenth century, and this knowledge was perfected during the twentieth century. In a telling comment on the complexities of energy transformations, we understood how to release nuclear energy (theoretically by the late 1930s, practically by 1942, when the first reactor began to operate) sooner than we knew how photosynthesis works (its sequences were unraveled only during the 1950s).

![Figure 1.1 Matrix of energy conversions. Where more possibilities exist, no more than two leading transformations are identified.](https://raw.githubusercontent.com/hdgroup/picasso/main/2023/energy-and-civilization/10752_001_fig_001.jpg)

Flows, Stores, and Controls

All known forms of energy are critical for human existence, a reality precluding any rank ordering of their importance. Much in the course of history has been determined and circumscribed by both universal and planetary flows of energy and by their regional or local manifestations. The fundamental features of the universe are governed by gravitational energy, which orders countless galaxies and star systems. Gravity also keeps our planet orbiting at just the right distance from the Sun, and it holds a sufficiently massive atmosphere that makes Earth habitable (box 1.1).

Box 1.1 Gravity and the habitability of Earth

Extreme tolerances of carbon-based metabolism are determined by the freezing point of water, whose liquid form is required for the formation and reactions of organic molecules (the lower bound), and by temperatures and pressures that destabilize amino acids and break down proteins (the upper bound). Earth’s continuously habitable zone (the range of orbital radii ensuring optimal conditions for a life-supporting planet) is very narrow (Perkins 2013). A recent calculation suggests that we are even closer to the limit than previously thought: Kopparapu and co-workers (2014) concluded that, given its atmospheric composition and pressure, Earth orbits at the inner edge of the habitable zone, just outside the radius where the runaway greenhouse effect would bring intolerably high temperatures.

About two billion years ago enough carbon dioxide (CO~2~) was sequestered by the ocean and by archaea and algae to prevent this effect on Earth, but if the planet had been a mere 1% farther from the Sun, virtually all of its water would have been locked in glaciers. And even with temperatures within an optimum band the planet could not support highly diversified life without its unique atmosphere, dominated by nitrogen, enriched by oxygen from photosynthesis, and containing a number of important trace gases regulating surface temperature—but this thin gaseous envelope could not persist without the planet being sufficiently large to exert enough gravity to hold the atmosphere in place.

As with all active stars, fusion powers the Sun, and the product of those thermonuclear reactions reaches Earth as electromagnetic (solar, radiant) energy. Its flux ranges over a broad spectrum of wavelengths, including visible light. About 30% of this enormous flow is reflected by clouds and surfaces, about 20% is absorbed by the atmosphere and clouds, and the remainder, roughly half of the total inflow, is absorbed by oceans and continents, gets converted to thermal energy, and is reradiated into space (Smil 2008a). The geothermal energy of Earth adds up to a much smaller heat flux: it results from the original gravitational accretion of the planetary mass and from the decay of radioactive matter, and it drives grand tectonic processes, which keep reordering oceans and continents and cause volcanic eruptions and earthquakes.

Only a tiny part of the incoming radiant energy, less than 0.05%, is transformed by photosynthesis into new stores of chemical energy in plants, providing the irreplaceable foundation for all higher life. Animate metabolism reorganizes nutrients into growing tissues and maintains bodily functions and constant temperature in all higher species. Digestion also generates the mechanical (kinetic) energy of working muscles. In their energy conversions, animals are inherently limited by the size of their bodies and by the availability of accessible nutrition. A fundamental distinguishing characteristic of our species has been the extension of these physical limits through a more efficient use of muscles and through the harnessing of energies outside our own bodies.

Unlocked by human intellect, these extrasomatic energies have been used for a growing variety of tasks, both as more powerful prime movers and as fuels whose combustion releases heat. The triggers of energy supplies depend on the flow of information and on an enormous variety of artifacts. These devices have ranged from such simple tools as hammerstones and levers to complex fuel-burning engines and reactors releasing the energy of nuclear fission. The basic evolutionary and historical sequence of these advances is easy to outline in broad qualitative terms. As with any nonphotosynthesizing organism, the most fundamental human energy need is for food. Foraging and scavenging by hominins were very similar to the food acquisition practices of their primate ancestors. Although some primates, as well as a few other mammals (including otters and elephants), some birds (ravens and parrots), and even some invertebrates (cephalopods), have evolved a small repertory of rudimentary tool-using capabilities (Hansell 2005; Sanz, Call, and Boesch 2014; fig. 1.2), only hominins made toolmaking a distinguishing mark of their behavior.

![Figure 1.2 Chimpanzee (*Pan troglodytes*) in Gabon using tools to crack nuts (Corbis).](https://raw.githubusercontent.com/hdgroup/picasso/main/2023/energy-and-civilization/10752_001_fig_002.jpg)

Tools have given us a mechanical advantage in the acquisition of food, shelter, and clothing. The mastery of fire greatly extended our range of habitation and set us further apart from animals. New tools led to the harnessing of domesticated animals, the building of more complex muscle-powered machines, and the conversion of a tiny fraction of the kinetic energies of wind and water to useful power. These new prime movers multiplied the power under human command, but for a very long time their use was circumscribed by the nature and magnitude of the captured flows. Most obviously, this was the case with sails, ancient and effective tools whose capabilities were restricted for millennia by prevailing wind flows and persistent ocean currents. These grand flows steered the late fifteenth-century European transatlantic voyages to the Caribbean. They also prevented the Spaniards from discovering Hawaii, even though Spanish trading ships, the Manila Galleons (Galeón de Manila), sailed once or twice a year across the Pacific from Mexico (Acapulco) to the Philippines for 250 years between 1565 and 1815 (Schurz 1939).

Controlled combustion in fireplaces, stoves, and furnaces turned the chemical energy of plants into thermal energy. This heat has been used directly in households and in smelting metals, firing bricks, and processing and finishing countless products. The combustion of fossil fuels made all of these traditional direct uses of heat more widespread and more efficient. A number of fundamental inventions made it possible to convert thermal energy from the burning of fossil fuels to mechanical energy. This was done first in steam and internal combustion engines, then in gas turbines and rockets. We have been generating electricity by burning fossil fuels, as well as by harnessing the kinetic energy of water, since 1882, and by fissioning a uranium isotope since 1956.

The combustion of fossil fuels and the generation of electricity created a new form of high-energy civilization whose expansion has now encompassed the whole planet and whose primary energy sources now include small but rapidly rising shares of new renewable sources, especially solar (harnessed by photovoltaic devices or in concentrating solar power plants) and wind (converted by large wind turbines). In turn, these advances have been predicated on a concatenation of other developments. To use a flow-model analogy, a combination of gates (valves) had to be set up and activated in proper sequence to enable the flow of human ingenuity.

The most notable gates required to release great energy potentials include requisite educational opportunities, predictable legal arrangements, transparent economic rules, the adequate availability of capital, and conditions conducive to basic research. Not surprisingly, it usually takes generations to allow much increased or qualitatively improved energy flows or to harness entirely new sources of energy on a significant scale. Timing, overall power, and the composition of the resulting energy flows are exceedingly difficult to predict, and during the earliest phases of such transitions it is impossible to appraise all eventual impacts that changing prime movers and fuel bases will have on farming, industries, transport, settlements, warfare, and Earth’s environment. Quantitative accounts are essential to appreciate the constraints of our actions and the extent of our achievements, and they require knowledge of basic scientific concepts and measures.

Concepts and Measures

Several first principles underlie all energy conversions. Every form of energy can be turned into heat, or thermal energy. No energy is ever lost in any of these conversions. Conservation of energy, the first law of thermodynamics, is one of the most fundamental universal realities. But as we move along conversion chains, the potential for useful work steadily diminishes (box 1.2). This inexorable reality defines the second law of thermodynamics, and entropy is the measure associated with this loss of useful energy. While the energy content of the universe is constant, conversions of energies increase its entropy (decrease its utility). A basketful of grain or a barrelful of crude oil is a low-entropy store of energy, capable of much useful work once metabolized or burned, and it ends up as the random motion of slightly heated air molecules, an irreversible high-entropy state that represents an irretrievable loss of utility.

Box 1.2 Diminishing utility of converted energy

Any energy conversion illustrates the principle. If an American reader uses electric light to illuminate this page, the electromagnetic energy of that light is only a small part of the chemical energy contained in the lump of coal used to generate it (in 2015 coal was used to produce 33% of the electricity generated in the United States). At least 60% of coal’s energy was lost as heat through a plant chimney and in cooling water, and if a reader uses an old incandescent light, then more than 95% of delivered electricity ends up as heat generated as the metal of the bulb’s coiled filament resists the electric current. The light reaching the page either is absorbed by it or is reflected and absorbed by its surroundings and reradiated as heat. The initial low-entropy input of coal’s chemical energy has been dissipated as diffused high-entropy heat that warmed the air above the station, along the wires, and around the light bulb and caused an imperceptible temperature increase above a page. No energy has been lost, but a highly useful form was degraded to the point of no practical utility.
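The chain of losses in box 1.2 can be tallied by multiplying the efficiencies of successive stages. A minimal Python sketch, assuming only the two bounds stated in the box (a power plant that loses at least 60% of coal's energy, hence is at most 40% efficient, and an incandescent bulb that turns less than 5% of electricity into light) and ignoring transmission losses, which the box does not quantify; the function and constant names are illustrative:

```python
from math import prod


def chain_efficiency(stage_efficiencies):
    """Overall efficiency of a chain of energy conversions.

    Each stage passes on only a fraction of the energy it receives,
    so the overall efficiency is the product of the stage efficiencies.
    """
    for eta in stage_efficiencies:
        if not 0.0 <= eta <= 1.0:
            raise ValueError(f"efficiency must be between 0 and 1, got {eta}")
    return prod(stage_efficiencies)


# Upper bounds taken from box 1.2 (transmission losses ignored)
PLANT_MAX = 0.40          # at least 60% of coal's energy is lost at the plant
INCANDESCENT_MAX = 0.05   # more than 95% of the electricity becomes heat

light_share = chain_efficiency([PLANT_MAX, INCANDESCENT_MAX])
print(f"Light reaching the page: at most {light_share:.0%} of coal's energy")
```

The remaining 98% or more is not destroyed; it persists as diffuse low-temperature heat, which is the degradation the box describes.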

This unidirectional entropic dissipation leads to a loss of complexity and to greater disorder and homogeneity in any closed system. But all living organisms, whether the smallest bacteria or a global civilization, temporarily defy this trend by importing and metabolizing energy. This means that every living organism must be an open system, maintaining a continuous inflow and outflow of energy and matter. As long as they are alive, these systems cannot be in a state of chemical and thermodynamic equilibrium (Prigogine 1947, 1961; von Bertalanffy 1968; Haynie 2001). Their negentropy—their growth, renewal, and evolution—results in greater heterogeneity and increasing structural and systemic complexity. As with so many other scientific advances, a coherent understanding of these realities came only during the nineteenth century, when the rapidly evolving disciplines of physics, chemistry, and biology found a common concern in studying transformations of energy (Atwater and Langworthy 1897; Cardwell 1971; Lindsay 1975; Müller 2007; Oliveira 2014; Varvoglis 2014).

These fundamental interests needed a codification of standard measurements. Two units became common for measuring energy: the calorie, a metric unit, and the British thermal unit (Btu). Today’s basic scientific unit of energy is the joule, named after an English physicist, James Prescott Joule (1818–1889), who published the first accurate calculation of the equivalence of work and heat (box 1.3). Power denotes the rate of energy flow. Its first standard unit, horsepower, was set by James Watt (1736–1819). He wanted to charge for his steam engines on a readily understandable basis, and so he chose the obvious comparison with the prime mover they were to replace, a harnessed horse commonly used to power a mill or a pump (fig. 1.3, box 1.3).

Box 1.3 Measuring energy and power

The official definition of a joule is the work accomplished when a force of one newton acts over a distance of one meter. Another option is to define a basic energy unit through heat requirements. One calorie is the amount of heat needed to raise the temperature of 1 cm^3^ of water by 1°C. That is a tiny amount of energy: to do the same for 1 kg of water calls for a thousand times more energy, or one kilocalorie (for the complete list of multiplier prefixes, see “Basic Measures” in the Addenda). Given the equivalence of heat and work, all that is required to convert calories to joules is to remember that one calorie equals roughly 4.2 joules. The conversion is equally simple for the still common nonmetric English measure, the British thermal unit. One Btu contains roughly 1,000 J (1,055, to be exact). A good comparative yardstick is the average daily food need. For most moderately active adults it is 2–2.7 Mcal, or about 8–11 MJ, and 10 MJ could be supplied by eating 1 kg of whole wheat bread.

In 1782 James Watt calculated in his Blotting and Calculation Book that a mill horse works at a rate of 32,400 foot-pounds a minute, and the next year he rounded this to 33,000 foot-pounds (Dickinson 1939). He assumed an average walking speed of about 3 feet per second, but we do not know where he got his figure for an average pull of about 180 pounds (180 lb × 3 ft/s × 60 s = 32,400 foot-pounds per minute). Some large animals were that powerful, but most horses in eighteenth-century Europe could not sustain the rate of one horsepower. Today’s standard unit of power, one watt, is equal to the flow of one joule per second. One horsepower is equal to about 750 watts (745.7, to be exact). Consuming 8 MJ of food a day corresponds to a power rate of about 90 W (8 MJ/(24 × 3,600 s) ≈ 93 W), less than the rating of a standard light bulb (100 W). A double toaster needs 1,000 W, or 1 kW; small cars deliver around 50 kW; a large coal-fired or nuclear power plant produces electricity at the rate of 2 GW.
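The arithmetic in box 1.3 is easy to check. A minimal Python sketch, assuming the thermochemical calorie (4.184 J, which the box rounds to 4.2 J) and the modern exact definitions of the foot (0.3048 m) and the pound-force (4.4482216152605 N); the function names are illustrative:

```python
# Conversion factors (assumed standard values; see lead-in)
J_PER_CAL = 4.184                          # thermochemical calorie; box 1.3 rounds to 4.2 J
J_PER_FT_LBF = 0.3048 * 4.4482216152605    # exact foot (m) times exact pound-force (N)
SECONDS_PER_DAY = 24 * 3600


def mcal_to_mj(mcal):
    """Megacalories to megajoules (the 10**6 prefixes cancel)."""
    return mcal * J_PER_CAL


def mj_per_day_to_watts(mj_per_day):
    """Average power, in watts, of an energy flow given in MJ per day."""
    return mj_per_day * 1e6 / SECONDS_PER_DAY


# Daily food need of 2-2.7 Mcal expressed in MJ
food_low, food_high = mcal_to_mj(2.0), mcal_to_mj(2.7)

# Watt's 1782 estimate: a 180 lb pull at 3 ft/s, over one minute
watt_ft_lb_per_min = 180 * 3 * 60          # 32,400; rounded up to 33,000 in 1783

# The rounded 33,000 ft-lb per minute expressed in watts (J/s)
hp_watts = 33_000 * J_PER_FT_LBF / 60

print(f"Daily food need: {food_low:.1f}-{food_high:.1f} MJ")
print(f"Watt's mill horse: {watt_ft_lb_per_min:,} ft-lb/min")
print(f"1 hp = {hp_watts:.1f} W")
print(f"8 MJ/day = {mj_per_day_to_watts(8):.0f} W")
```

Converting Watt's rounded 33,000 foot-pounds per minute with today's unit definitions reproduces the familiar 745.7 W per horsepower.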

![Figure 1.3 Two horses turning a capstan geared to a pump drawing well water in a mid-eighteenth-century French carpet manufactory (reproduced from the *Encyclopédie* [Diderot and d'Alembert 1769–1772]). An average horse of that period could not sustain a steady work rate of one horsepower. James Watt used an exaggerated rating to ensure customers' satisfaction with his horsepower-denominated steam engines installed to replace harnessed animals.](https://raw.githubusercontent.com/hdgroup/picasso/main/2023/energy-and-civilization/10752_001_fig_003.jpg)

Another important rate is energy density, the amount of energy per unit mass of a resource (box 1.4). This value is of critical importance for foodstuffs: even where abundant, low-energy-density foods could never become staples. For example, the pre-Hispanic inhabitants of the basin of Mexico always ate plenty of prickly pears, which were easy to gather from the many species of cacti belonging to the genus Opuntia (Sanders, Parsons, and Santley 1979). But, as with most fruits, the pear’s pulp is overwhelmingly (about 88%) water, with less than 10% carbohydrates, 2% protein, and 0.5% lipids, and it has an energy density of just 1.7 MJ/kg (Feugang et al. 2006). This means that even a small woman surviving only on the carbohydrates of cactus pears (assuming, unrealistically, virtually no need for the other two macronutrients) would have to eat 5 kg of the fruit every day, but she could get the same amount of energy from only about 650 g of ground corn consumed as tortillas or tamales.
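The cactus-pear comparison reduces to one division: daily energy need over energy density. A minimal Python sketch; the 13 MJ/kg used for ground corn is an assumption picked from the 12–15 MJ/kg range for cereal grains in box 1.4, not a figure given in the text, and the function name is illustrative:

```python
def mass_needed_kg(daily_energy_mj, energy_density_mj_per_kg):
    """Mass of a single food that supplies a given daily energy intake."""
    if energy_density_mj_per_kg <= 0:
        raise ValueError("energy density must be positive")
    return daily_energy_mj / energy_density_mj_per_kg


PEAR_MJ_PER_KG = 1.7    # cactus pear pulp, as cited above
CORN_MJ_PER_KG = 13.0   # assumption: within box 1.4's 12-15 MJ/kg for cereals

daily_energy = 5 * PEAR_MJ_PER_KG           # energy in 5 kg of pears: 8.5 MJ
corn_g = mass_needed_kg(daily_energy, CORN_MJ_PER_KG) * 1000
print(f"{daily_energy:.1f} MJ from about {corn_g:.0f} g of ground corn")
```

With any density inside the cereal range the answer stays between roughly 570 and 710 g, so the contrast with 5 kg of fruit does not depend on the exact value chosen.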

Box 1.4 Energy densities of foodstuffs and fuels

| Ranking | Examples | Energy density (MJ/kg) |
|---|---|---|
| *Foodstuffs* | | |
| Very low | Vegetables, fruits | 0.8–2.5 |
| Low | Tubers, milk | 2.5–5.0 |
| Medium | Meats | 5.0–12.0 |
| High | Cereal and legume grains | 12.0–15.0 |
| Very high | Oils, animal fats | 25.0–35.0 |
| *Fuels* | | |
| Very low | Peats, green wood, grasses | 5.0–10.0 |
| Low | Crop residues, air-dried wood | 12.0–15.0 |
| Medium | Dry wood | 17.0–21.0 |
| Medium | Bituminous coals | 18.0–25.0 |
| High | Charcoal, anthracite | 28.0–32.0 |
| Very high | Crude oils | 40.0–44.0 |

Sources: Specific energy densities for individual foodstuffs and fuels are listed in Merrill and Watt (1973), Jenkins (1993), and USDA (2011).

Power density is the rate at which energies are produced or consumed per unit of area and hence is a critical structural determinant of energy systems (Smil 2015b). For example, city size in all traditional societies depended on fuelwood and charcoal, and it was clearly limited by the inherently low power density of phytomass production (box 1.5, fig. 1.4). The power density of sustainable annual tree growth in temperate climates is at best equal to 2% of the power density of energy consumption for traditional urban heating, cooking, and manufactures. Consequently, cities had to draw on nearby areas at least 30 times their size for fuel supply. This reality restricted their growth even where other resources, such as food and water, were adequate.

Box 1.5 Power densities of phytomass fuels

Photosynthesis converts less than 0.5% of incoming solar radiation into new phytomass. The best annual fuelwood productivities of traditional fast-growing species (poplars, eucalyptus, pines) were no more than 10 t/ha, and in drier regions the rates were between 5 and 10 t/ha (Smil 2015b). With the energy density of dry wood averaging 18 GJ/t, the harvest of 10 t/ha would translate into a power density of about 0.6 W/m^2^: (10 t/ha × 18 GJ/t)/(3.15 × 10^7^ seconds in one year) ≈ 5,708 W per hectare, and 5,708 W/10,000 m^2^ ≈ 0.6 W/m^2^. A large eighteenth-century city would have required at least 20–30 W/m^2^ of its built-up area for heating, cooking, and artisanal manufactures, and its fuelwood would have had to come from an area at least 30 and up to 50 times its size.

But cities required plenty of charcoal, the only preindustrial smokeless fuel preferred for indoor heating by all traditional civilizations, and charcoaling entailed further substantial energy loss. Even by the mid-eighteenth century the typical charcoal-to-wood mass ratio was still as low as 1:5 (5 t of wood for every tonne of charcoal), which means that in energy terms (with dry wood at 18 GJ/t and charcoal [virtually pure carbon] at 29 GJ/t), this conversion was only about 30% efficient (29/(5 × 18) = 0.32), and the power density of wood harvests destined for charcoal production was only about 0.2 W/m^2^. Consequently, large preindustrial cities located in a northern temperate climate and relying heavily on charcoal (China’s Xi’an or Beijing would be good examples) would have required a wooded area at least 100 times their size to ensure a continuous supply of that fuel.
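Both calculations in box 1.5 follow one pattern: annual energy harvested per hectare, divided by the seconds in a year and the square meters in a hectare, then compared with urban demand. A minimal Python sketch, assuming a 365-day year and taking the 20 W/m^2^ lower bound for urban demand from the box; the function name is illustrative:

```python
SECONDS_PER_YEAR = 365 * 24 * 3600      # 31,536,000 s, about 3.15 x 10**7
M2_PER_HA = 10_000


def harvest_power_density(t_per_ha, gj_per_t):
    """Average power density (W/m^2) of an annual phytomass harvest."""
    joules_per_ha_per_year = t_per_ha * gj_per_t * 1e9
    return joules_per_ha_per_year / SECONDS_PER_YEAR / M2_PER_HA


# Fuelwood: best traditional yield of 10 t/ha of dry wood at 18 GJ/t
wood_pd = harvest_power_density(10, 18)            # about 0.57 W/m^2

# Charcoal: 5 t of wood (18 GJ/t) yield 1 t of charcoal (29 GJ/t)
charcoal_efficiency = 29 / (5 * 18)                # about 0.32
charcoal_pd = wood_pd * charcoal_efficiency        # about 0.18 W/m^2

CITY_DEMAND = 20.0    # W/m^2 of built-up area, lower bound from box 1.5

for fuel, pd in (("fuelwood", wood_pd), ("charcoal", charcoal_pd)):
    # Area multiple: how many times the city's own area must be harvested
    print(f"{fuel}: {pd:.2f} W/m^2, area multiple {CITY_DEMAND / pd:.0f}")
```

Carrying the unrounded 0.57 W/m^2^ through gives multiples of about 35 for fuelwood and about 109 for charcoal, in line with the box's "at least 30" and "at least 100".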

![Figure 1.4 Charcoaling in mid-seventeenth-century England as depicted in John Evelyn's *Sylva* (1664).](https://raw.githubusercontent.com/hdgroup/picasso/main/2023/energy-and-civilization/10752_001_fig_004.jpg)

Yet another rate, one that has assumed great importance with advancing industrialization, is the efficiency of energy conversions. This ratio of output to input describes the performance of energy converters, be they stoves, engines, or lights. While we cannot do anything about the entropic dissipation, we can improve the efficiency of conversions by lowering the amount of energy required to perform specific tasks (box 1.6). There are fundamental (thermodynamic, mechanical) constraints to these improvements, and we have pushed some processes close to their practical efficiency limits, but in most instances, including such common energy converters as internal combustion engines and lights, there is still much room for further improvement.

Box 1.6 Efficiency improvements and the Jevons paradox

Technical advances have brought many impressive efficiency gains, and the history of lighting offers one of the best examples (Nordhaus 1998; Fouquet and Pearson 2006). Candles convert just 0.01% of chemical energy in tallow or wax to light. Edison’s light bulbs of the 1880s were roughly ten times as efficient. By 1900, coal-fired electricity-generating plants had efficiencies of just 10%; light bulbs turned no more than 1% of electricity into light, and hence about 0.1% of coal’s chemical energy appeared as light (Smil 2005). The best combined-cycle gas turbine plants (using hot gas exiting a gas turbine to produce steam for a steam turbine) are now about 60% efficient, while fluorescent lights have efficiencies up to 15%, as do light-emitting diodes (USDOE 2013). This means that about 9% of energy in natural gas ends up as light, a 90-fold gain since the late 1880s. Such gains have saved capital and operation costs and lowered environmental impacts.

But in the past, the rise of conversion efficiency did not necessarily result in actual energy savings. In 1865 Stanley Jevons (1835–1882), an English economist, pointed out that the adoption of more efficient steam engines was accompanied by large increases in coal consumption and concluded, “It is wholly a confusion of ideas to suppose that the economical use of fuels is equivalent to a diminished consumption. The very contrary is the truth. As a rule, new modes of economy will lead to an increase of consumption according to a principle recognized in many parallel instances” (Jevons 1865, 140). This reality has been confirmed by many studies (Herring 2004, 2006; Polimeni et al. 2008), but in affluent countries, those whose high per capita energy use has approached, or already reached, saturation levels, the effect has been getting weaker. There, rebounds attributable to higher efficiency at the end-use level are often small and decrease over time, and some economy-wide rebounds may be trivial or even net positive (Goldstein, Martinez, and Roy 2011).
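The lighting figures in box 1.6 are again products of stage efficiencies: generation times lamp. A minimal Python sketch using only the percentages quoted in the box for around 1900 (10% plant, 1% bulb) and for the best current practice (60% combined-cycle plant, 15% lamp); the function name is illustrative:

```python
from math import prod


def share_as_light(*stage_efficiencies):
    """Fraction of primary fuel energy that ends up as light.

    Each stage passes on only part of what it receives, so the overall
    share is the product of the stage efficiencies.
    """
    return prod(stage_efficiencies)


around_1900 = share_as_light(0.10, 0.01)   # coal-fired plant 10%, light bulb 1%
best_today = share_as_light(0.60, 0.15)    # combined-cycle gas 60%, LED or fluorescent 15%

gain = best_today / around_1900
print(f"{around_1900:.1%} -> {best_today:.0%}: about {gain:.0f}-fold")
```

Improving either stage alone would have yielded far less; the 90-fold gain comes from a sixfold rise in generation efficiency multiplied by a fifteenfold rise in lamp efficiency.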

When efficiencies are calculated for the production of foodstuffs (energy in food/energy in inputs to grow it), fuels, or electricity they are usually called energy returns. Net energy returns in every traditional agriculture relying solely on animate power had to be considerably greater than one: edible harvests had to contain more energy than the amount consumed as food and feed needed not only by people and animals producing those crops but also by their nonworking dependents. An insurmountable problem arises when we try to compare energy returns in traditional agricultures that were powered solely by animate energies (and hence involved only transformations of recently received solar radiation) with those in modern farming, which is subsidized directly (fuel for field operations) and indirectly (the energies needed to synthesize fertilizers and pesticides and to build farm machinery) and hence has, invariably, lower energy returns than traditional cropping (box 1.7).

Box 1.7 Comparison of energy returns in food production

Since the early 1970s, energy ratios have been used to illustrate the superiority of traditional farming and the low energy returns of modern agriculture. Such comparisons are misleading owing to a fundamental difference between the two ratios. Those for traditional farming are simply quotients of the food energy harvested in crops and the food and feed energy needed to produce those harvests by deploying human and animal labor. In contrast, in modern farming the denominator is composed overwhelmingly of nonrenewable fossil fuel inputs needed to power field machinery and to make machines and farm chemicals; labor inputs are negligible.

If the ratios were calculated merely as quotients of edible energy output to labor input, then modern systems, with their minuscule amount of human effort and with no draft animals, would look superior to any traditional practice. If the cost of producing a modern crop included all fossil fuels and electricity used, converted to a common denominator, then the energy returns in modern agriculture would be substantially below traditional returns. Such a calculation is possible because of the physical equivalence of energies. Both food and fuels can be expressed in identical units, but an obvious “apples and oranges” problem remains: there is no satisfactory way to compare, simply and directly, the energy returns of the two farming systems that depend on two fundamentally different kinds of energy inputs.
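The choice of denominator is what flips the comparison. The sketch below illustrates this with made-up round numbers for a hypothetical modern field. The values are not measurements and the function name is illustrative. The code cannot resolve the “apples and oranges” problem, because it simply adds food energy and fossil energy as if they were interchangeable.

```python
def energy_return(food_output_mj: float, inputs_mj: list[float]) -> float:
    """Edible energy harvested divided by the sum of the energy inputs counted."""
    return food_output_mj / sum(inputs_mj)


# Hypothetical inputs per hectare (illustrative only, not measured values).
harvest_mj = 30_000.0
labor_mj = 300.0      # food energy attributed to human labor
fossil_mj = 15_000.0  # fuels, fertilizers, pesticides, machinery

labor_only = energy_return(harvest_mj, [labor_mj])
all_inputs = energy_return(harvest_mj, [labor_mj, fossil_mj])
print(f"labor-only ratio: {labor_only:.0f}")
print(f"all-inputs ratio: {all_inputs:.1f}")
```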

Finally, energy intensity measures the cost of products, services, aggregate economic output, and even energy itself in standard energy units. Among the commonly used materials, aluminum and plastics are highly energy-intensive, while glass and paper are relatively cheap, and lumber (excluding its photosynthetic cost) is the least energy-intensive widely deployed material (box 1.8). The technical advances of the past two centuries have brought many substantial declines in energy intensities. Perhaps most notably, the coke-fueled smelting of pig iron in large blast furnaces now requires less than 10% of the energy per unit mass of hot metal that the preindustrial charcoal-based production of pig iron required (Smil 2016).

Box 1.8 Energy intensities of common materials

<figure>

<figcaption>

</figcaption>

| Material | Energy cost (MJ/kg) | Process |
|---|---|---|
| Aluminum | 175–200 | Metal from bauxite |
| Bricks | 1–2 | Fired from clay |
| Cement | 2–5 | From raw materials |
| Copper | 90–100 | From ore |
| Explosives | 10–70 | From raw materials |
| Glass | 4–10 | From raw materials |
| Gravel | <1 | Excavated |
| Iron | 12–20 | From iron ore |
| Lumber | 1–3 | From standing timber |
| Paper | 23–35 | From standing timber |
| Plastics | 60–120 | From hydrocarbons |
| Plywood | 3–7 | From standing timber |
| Sand | <1 | Excavated |
| Steel | 20–25 | From pig iron |
| Steel | 10–12 | From scrap metal |
| Stone | <1 | Quarried |

Source: Data from Smil (2014b).

</figure>
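The ranges in box 1.8 can be turned directly into the energy embodied in a given mass of material. The sketch below copies a subset of the box's values. The function name and the 10 kg quantity are hypothetical, and lumber still excludes its photosynthetic cost.

```python
# Energy cost ranges (MJ/kg) copied from box 1.8 (Smil 2014b).
INTENSITY_MJ_PER_KG = {
    "aluminum (from bauxite)": (175, 200),
    "plastics (from hydrocarbons)": (60, 120),
    "steel (from pig iron)": (20, 25),
    "steel (from scrap)": (10, 12),
    "glass": (4, 10),
    "lumber": (1, 3),  # excludes the photosynthetic cost of the wood
}


def embodied_energy_mj(material: str, mass_kg: float) -> tuple[float, float]:
    """Low and high estimates of the energy needed to produce mass_kg of material."""
    low, high = INTENSITY_MJ_PER_KG[material]
    return low * mass_kg, high * mass_kg


# A hypothetical 10 kg quantity of each material.
for name in INTENSITY_MJ_PER_KG:
    low, high = embodied_energy_mj(name, 10.0)
    print(f"{name:30s} {low:7.0f} - {high:7.0f} MJ")
```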

The energy cost of energy (often called EROI, energy return on investment, although EROEI, energy return on energy investment, would be more correct) is a revealing measure only if we compare values that have been calculated by identical methods using standard assumptions and clearly identified analytical boundaries. Modern high-energy societies have preferred to develop fossil fuel resources with the highest net energy returns, and that is a major reason why we have favored crude oil in general, and the rich Middle Eastern fields in particular; oil’s high energy density, and hence easy transportability, are other obvious advantages (box 1.9).

Box 1.9 Energy returns on energy investment

Differences in the quality and accessibility of fossil fuels are enormous: thin underground seams of low-quality coal versus a thick layer of good bituminous coal that can be extracted in open-cast mines, or supergiant Middle Eastern hydrocarbon fields versus low-productivity wells that require constant pumping. As a result, specific EROEI values differ substantially—and they can change with the development of more efficient recovery techniques. The following ranges are only approximate indicators, illustrating differences among leading extraction and conversion methods (Smil 2008a; Murphy and Hall 2010). For coal production they range between 10 and 80, while for oil and gas they have ranged from 10 to far above 100; for large wind turbines in the windiest locations they may approach 20 but are mostly less than 10; for photovoltaic solar cells they are no higher than 2; and for modern biofuels (ethanol, biodiesel) they are at best only 1.5, but their production has often entailed an energy loss or no net gain (an EROEI of just 0.9–1.0).
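The practical meaning of these ratios becomes clearer when they are expressed as the share of gross output that remains after the energy invested is repaid. With EROEI = output/investment, that share is 1 - 1/EROEI. The sketch below assumes this simple definition and ignores the differences in analytical boundaries stressed in the text. The representative values are illustrative picks from within the ranges in box 1.9 (the wind value in particular is an assumption).

```python
def net_energy_fraction(eroei: float) -> float:
    """Share of gross energy output left after repaying the energy invested.

    With output E_out and investment E_in, EROEI = E_out / E_in, so
    (E_out - E_in) / E_out = 1 - 1 / EROEI.
    """
    if eroei <= 0:
        raise ValueError("EROEI must be positive")
    return 1.0 - 1.0 / eroei


# Illustrative values picked from within the ranges quoted in box 1.9.
cases = [("oil and gas", 100), ("coal", 10), ("wind", 8),
         ("photovoltaics", 2), ("biofuels", 1.5), ("biofuels, loss", 0.9)]
for label, eroei in cases:
    print(f"{label:15s} EROEI {eroei:>5}: net share {net_energy_fraction(eroei):6.1%}")
```

Below an EROEI of about 10, the net share falls off steeply. At 1.0 it reaches zero, and an EROEI of 0.9 yields a negative share, which is a net energy loss.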

Complexities and Caveats

Using standard units to measure energy storages and flows is physically straightforward and scientifically impeccable—yet these reductions to a common denominator are also misleading. Above all, they cannot capture critical qualitative differences among various energies. Two kinds of coal may have an identical energy density, but one may burn very cleanly and leave behind only a small amount of ash, while the other may smoke heavily, emit a great deal of sulfur dioxide, and leave a large incombustible residue. An abundance of high-energy-density coal ideal for fueling steam engines (the often used adjective “smokeless” must be seen in relative terms) was clearly a major factor contributing to the British dominance of nineteenth-century maritime transport, as neither France nor Germany had large coal resources of comparable quality.

Abstract energy units cannot differentiate between edible and inedible biomass. Identical masses of wheat and dry wheat straw contain virtually the same amount of heat energy, but straw, composed mostly of cellulose, hemicellulose, and lignin, cannot be digested by humans, while wheat (composed of about 70% complex starchy carbohydrates and up to 14% protein) is an excellent source of basic nutrients. Abstract units also hide the specific origin of food energy, a matter of great importance for proper nutrition. Many high-energy foods contain no, or hardly any, protein and lipids, two nutrients required for normal body growth and maintenance, and they may not provide any essential micronutrients—vitamins and minerals.

There are other important qualities hidden by abstract measures. Access to energy stores is obviously a critical matter. Tree stem wood and branch wood have the same energy densities, but without good axes and saws, people in many preindustrial societies could only gather the latter fuel. That is still the norm in the poorest parts of Africa or Asia, where children and women gather woody phytomass; and its form, and hence its transportability, also matters because they have to carry wood (branch) loads home on their heads, often for considerable distances. Ease of use and conversion efficiency can be decisive in choosing a fuel. A house can be heated by wood, coal, fuel oil, or natural gas, but the best gas furnaces are now up to 97% efficient, hence far cheaper to operate than any other option.

Burning straw in simple stoves requires frequent stoking, while large wood pieces can be left burning unattended for hours. Unvented (or poorly vented, through a hole in the ceiling) indoor cooking with dry dung produces much more smoke than the burning of seasoned wood in a good stove, and indoor biomass combustion remains a major source of respiratory illnesses in many low-income countries (McGranahan and Murray 2003; Barnes 2014). And unless their origins are specified, densities or energy flows do not differentiate between renewable and fossil energies—yet this distinction is fundamental to understanding the nature and durability of a given energy system. Modern civilization has been created by the massive, and increasing, combustion of fossil fuels, but this practice is clearly limited by their crustal abundance, as well as by the environmental consequences of burning coals and hydrocarbons, and high-energy societies can ensure their survival only by an eventual transition to nonfossil sources.

Further difficulties arise when comparing the efficiencies of animate and inanimate energy conversions. In the latter case efficiency is simply the ratio of useful energy output to fuel or electricity input, but in the former case daily food (or feed) intake should not be counted as an energy input of human or animal labor because most of that energy is required for basal metabolism—that is, to support the functioning of the body’s vital organs and to maintain steady body temperature—and basal metabolism operates regardless of whether people or animals rest or work. Calculating the net energy cost is perhaps the most satisfactory solution (box 1.10).

Box 1.10 Calculating the net energy cost of human labor

There is no universally accepted way to express the energy cost of human labor, and calculating the net energy cost is perhaps the best choice: it is a person’s energy consumption above the existential need that would have to be satisfied even if no work were done. This approach debits human labor with its actual incremental energy cost. Total energy expenditure is a product of basal (or resting) metabolic rate and physical activity level (TEE = BMR × PAL), and the incremental energy cost will obviously be the difference between TEE and BMR. The BMR of an adult man weighing 70 kg would be about 7.5 MJ/day, and for a 60 kg woman it would be about 5.5 MJ/day. If we assume that hard work will raise the daily energy requirement by about 30%, then the net energy cost would be about 2.2 MJ/day for men and 1.7 MJ/day for women, and hence I will use 2 MJ/day in all approximate calculations of net daily energy expenditures in foraging, traditional farming, and industrial work.
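The arithmetic of the net energy cost can be written out using TEE = BMR × PAL. The box's figures (2.2 and 1.7 MJ/day) correspond to an increment of 30% of BMR. The sketch therefore assumes an effective PAL of 1.3 relative to BMR. That reading is an assumption, and the function name is illustrative.

```python
def net_work_cost_mj(bmr_mj_per_day: float, pal: float) -> float:
    """Incremental energy cost of work: TEE - BMR, with TEE = BMR * PAL."""
    tee = bmr_mj_per_day * pal
    return tee - bmr_mj_per_day


# Box 1.10 values: BMR of 7.5 MJ/day (70 kg man) and 5.5 MJ/day (60 kg woman).
# Assumption: "about 30% more" is read as PAL = 1.3 relative to BMR.
man = net_work_cost_mj(7.5, 1.3)
woman = net_work_cost_mj(5.5, 1.3)
print(f"man: {man:.2f} MJ/day, woman: {woman:.2f} MJ/day")
```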

Daily food intake should not be counted as an energy input of labor: basal metabolism (to support vital organs, circulate the blood, and maintain a steady body temperature) operates regardless of whether we rest or work. Studies of muscle physiology, especially the work of Archibald V. Hill (1886–1977, recipient of the Nobel Prize in Physiology or Medicine in 1922), made it possible to quantify the efficiency of muscular work (Hill 1922; Whipp and Wasserman 1969). The net efficiency of steady aerobic performance is about 20%, and this means that 2 MJ/day of metabolic energy attributable to a physical task would produce useful work equal to about 400 kJ/day. I will use this approximation in all relevant calculations. In contrast, Kander, Malanima, and Warde (2013) used total food intake rather than actual useful energy expenditure in their historical comparison of energy sources. They assumed an average annual food intake of 3.9 GJ/capita, unchanged between 1800 and 2008.
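The gap between the two accounting approaches is easy to quantify. The sketch below converts the 2 MJ/day net cost into useful work at 20% efficiency, expresses that work as average power over a day, and compares it with the 3.9 GJ/year total intake assumed by Kander, Malanima, and Warde. The constant names are illustrative.

```python
NET_COST_MJ_PER_DAY = 2.0   # rounded net energy cost of labor (box 1.10)
MUSCLE_EFFICIENCY = 0.20    # net efficiency of steady aerobic work
SECONDS_PER_DAY = 86_400

# Useful work from the metabolic energy attributable to the task.
useful_work_kj = NET_COST_MJ_PER_DAY * 1_000 * MUSCLE_EFFICIENCY
# The same work averaged over a whole day, expressed as power (W = J/s).
useful_power_w = useful_work_kj * 1_000 / SECONDS_PER_DAY

# For contrast: total food intake of 3.9 GJ/capita per year, per day.
total_intake_mj_per_day = 3.9e3 / 365

print(f"useful work: {useful_work_kj:.0f} kJ/day (~{useful_power_w:.1f} W over 24 h)")
print(f"total food intake: {total_intake_mj_per_day:.1f} MJ/day, "
      f"about {total_intake_mj_per_day * 1_000 / useful_work_kj:.0f} times the useful work")
```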

But even in much simpler societies than ours a great deal of labor was always mental rather than physical—deciding how to approach a task, how to execute it with the limited power available, how to lower energy expenditures—and the metabolic cost of thinking, even very hard thinking, is very small compared to strenuous muscular exertion. On the other hand, mental development requires years of language acquisition, socialization, and learning by mentoring and the accumulation of experience, and as societies progressed, this learning process became more demanding and longer lasting through formal schooling and training, services that have come to require considerable indirect energy inputs to support requisite physical infrastructures and human expertise.

A circle is closed. I have noted the necessity of quantitative evaluations, but the real understanding of energy in history requires much more than reducing everything to numerical accounts in joules and watts and treating them as all-encompassing explanations. I will approach the challenge in both ways: I will note energy and power requirements and densities and point out improving efficiencies, but I will not ignore the many qualitative attributes that constrain or promote specific energy uses. And while the imperatives of energy needs and uses have left a powerful imprint on history, many details, sequences, and consequences of these fundamental evolutionary determinants can be explained only by referring to human motivations and preferences, and by acknowledging those surprising, and often seemingly inexplicable, choices that have shaped our civilization’s history.

[]{#c10752_002.xhtml}

2 Energy in Prehistory

Understanding the origins of the genus Homo and filling in the details of its subsequent evolution is a never-ending quest as new findings push back many old markers and complicate the overall picture with the discovery of species that do not fit easily into an existing hierarchy (Trinkaus 2005; Reynolds and Gallagher 2012). In 2015 the oldest reliably dated hominin remains were those of Ardipithecus ramidus (4.4 million years ago, found in 1994) and Australopithecus anamensis (4.1–5.2 million years ago, found in 1967). A notable 2015 addition was Australopithecus deyiremeda (3.3–3.5 million years ago) from Ethiopia (Haile-Selassie et al. 2015). The sequence of younger hominins includes Australopithecus afarensis (unearthed in 1974 in Laetoli, Tanzania, and in Hadar, Ethiopia), Homo habilis (discovered in 1960 in Tanzania), and Homo erectus (beginning 1.8 million years ago, with many finds in Africa, Asia, and Europe extending to about 250,000 years ago).

Reanalysis of the first Homo sapiens bones—Richard Leakey’s famous discoveries in Ethiopia starting in 1967—dated them to about 190,000 years ago (McDougall, Brown, and Fleagle 2005). Our direct ancestors thus spent their lives as simple foragers, and it was only about 10,000 years ago that the first small populations of our species began a sedentary existence based on the domestication of plants and animals. This means that for millions of years, the foraging strategies of hominins resembled those of their primate ancestors, but we now have isotopic evidence from East Africa that by about 3.5 million years ago hominin diets began to diverge from those of extant apes. Sponheimer and co-workers (2013) showed that after that time, several hominin taxa began to incorporate ^13^C-enriched foods (produced by C~4~ or crassulacean acid metabolism) in their diets and had a highly variable carbon isotope composition atypical of African mammals. Reliance on C~4~ plants is thus of ancient origin, and in modern agriculture two C~4~ cultivars, corn and sugar cane, have higher average yields than any other species grown for its grain or sugar content.

The first evolutionary departure that eventually led to our species was not a larger brain size or toolmaking but bipedalism, a structurally improbable yet immensely consequential adaptation whose beginnings can be traced as far back as about seven million years ago (Johanson 2006). Humans are the only mammals whose normal way of locomotion is walking upright (other primates do so only occasionally), and hence bipedalism can be seen as the critical breakthrough adaptation that eventually made us human. Yet bipedalism—essentially a sequence of arrested falls—is inherently unstable and clumsy: “Human walking is a risky business. Without split-second timing man would fall flat on his face; in fact, with each step he takes, he teeters on the edge of catastrophe” (Napier 1970, 165). And besides making us prone to musculoskeletal injuries, bipedalism also leads to age-related bone loss, osteopenia (lower than normal bone density), and osteoporosis (Latimer 2005).

Many answers have been offered to the obvious question of why, then, do it, and some of them, as Johanson (2006) summarily argues, appear quite unpersuasive. To appear taller in order to intimidate predators would have had no effect on wild dogs or cheetahs or hyenas, which are not intimidated by much larger mammalian species. To become upright just to look over tall grass would only have attracted predators; reaching for fruit on low-hanging branches could be done without surrendering rapid quadruped running; and the cooling of bodies could be achieved by resting in shade and foraging only during cooler mornings or evenings. Differences in overall energy expenditure may offer the best explanation (Lovejoy 1988). Hominins, much like other mammals, spend most of their energy in reproduction, feeding, and ensuring safety, and if bipedalism helped to do all of these, then it would have been adopted.

As Johanson (2006, 2) puts it, “Natural selection cannot create a behavior like bipedalism, but it can act to select the behavior once it has arisen.” Viewed in a narrower sense, it is not clear that bipedalism offered sufficient biomechanical advantage to promote its selection just on the basis of the energy cost of walking (Richmond et al. 2001), though Sockol, Raichlen, and Pontzer (2007), after measuring energy expenditure in walking chimpanzees and adult humans, found that human walking costs about 75% less energy than both quadrupedal and bipedal walking in chimpanzees. The difference is attributable to differences in anatomy and gait, above all to the more extended hip and longer hind limb in humans.

Bipedalism started a cascade of enormous evolutionary adjustments (Kingdon 2003; Meldrum and Hilton 2004). Upright walking liberated hominin arms for carrying weapons and for taking food to group sites instead of consuming it on the spot. But bipedalism was not necessary to trigger hand dexterity and tool use. Hashimoto and co-workers (2013) concluded that adaptations underlying tool use evolved independently of those required for human bipedalism because in both humans and monkeys, each finger is represented separately in the primary sensorimotor cortex, just as the fingers are physically separated in the hand. This creates the ability to use each digit independently in the complex manipulations required for tool use. But without bipedalism it would be impossible to use the trunk for leverage in accelerating the hand during toolmaking and tool use. Bipedalism also freed the mouth and teeth to develop a more complex call system as the prerequisite of language (Aiello 1996). These developments required larger brains whose energy cost eventually reached three times the level for chimpanzees, accounting for up to one-sixth of the total basal metabolic rate (Foley and Lee 1991; Lewin 2004). The average encephalization quotient (actual/expected brain mass for body weight) is 2–3.5 for primates and early hominins, while for humans it is a bit higher than 6. Three million years ago Australopithecus afarensis had a brain volume of less than 500 cm^3^; 1.5 million years ago the volume had doubled in Homo erectus, and then it increased by roughly 50% in Homo sapiens (Leonard, Snodgrass, and Robertson 2007).

A higher encephalization quotient was critical for the rise of social complexity (which raised the survival odds and set hominins apart from other mammals) and was closely related to changes in the quality of food consumed. The brain’s specific energy need is roughly 16 times that of skeletal muscles, and the human brain claims 20–25% of resting metabolic energy, compared to 8–10% in other primates and just 3–5% in other mammals (Holliday 1986; Leonard et al. 2003). The only way to accommodate large brain size while maintaining the overall metabolic rate (the human resting metabolism is no higher than that of other mammals of similar mass) was to reduce the mass of other metabolically expensive tissues. Aiello and Wheeler (1995) argued that reducing the size of the gastrointestinal tract was the best option because the gut mass (unlike the mass of hearts or kidneys) can vary substantially, depending on the diet.
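The 20–25% share is easier to picture when converted to average power. The sketch below makes an assumption borrowed from box 1.10: a basal metabolic rate of 7.5 MJ/day for a 70 kg man, with basal and resting metabolism treated as equivalent. The function name is illustrative.

```python
SECONDS_PER_DAY = 86_400


def brain_power_w(bmr_mj_per_day: float, brain_share: float) -> float:
    """Average power (W) drawn by the brain, given BMR and the brain's share of it."""
    return bmr_mj_per_day * 1e6 * brain_share / SECONDS_PER_DAY


# Assumption: the 7.5 MJ/day basal rate of a 70 kg man used in box 1.10.
BMR_MJ_PER_DAY = 7.5
for share in (0.20, 0.25):
    print(f"{share:.0%} of {BMR_MJ_PER_DAY} MJ/day -> "
          f"{brain_power_w(BMR_MJ_PER_DAY, share):.1f} W")
```

Under these assumptions, the human brain runs continuously on roughly 17–22 W.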

Fish and Lockwood (2003), Leonard, Snodgrass, and Robertson (2007), and Hublin and Richards (2009) confirmed that diet quality and brain mass have a significantly positive correlation in primates, and better hominin diets, including meat, supported larger brains, whose high energy need was partly offset by a reduced gastrointestinal tract (Braun et al. 2010). While extant nonhuman primates have more than 45% of their gut mass in the colon and only 14–29% in the small intestine, in humans those shares are reversed, with more than 56% in the small intestine and only 17–25% in the colon, a clear indication of adaptation to high-quality, energy-dense foods (meat, nuts) that can be digested in the small intestine. Increased meat consumption also helps to explain human gains in body mass and height, as well as smaller jaws and teeth (McHenry and Coffing 2000; Aiello and Wells 2002). But a higher meat intake could not change the energy basis of evolving hominins: to secure any food they had to rely only on their muscles and on simple stratagems while gathering, scavenging, hunting, and fishing.

Tracing the genesis of the first wooden tools (sticks and clubs) is impossible as only those artifacts that were preserved in anoxic environments, most commonly in bogs, were able to survive for extended periods. Disintegration is not a problem with the hard stones used to fashion simple tools, and new findings have been pushing back the date of the earliest verifiable hominin stone tools. For several decades the consensus dated the earliest stone tools to about 2.5 million years ago. Cobble-based, these relatively small and simple Oldowan hammerstones (cores with an edge), choppers, and flakes made it much easier to butcher animals and to break their bones (de la Torre 2011). But the latest findings at the Lomekwi site in West Turkana, Kenya, pushed the date of the oldest known stone toolmaking to about 3.3 million years ago (Harmand et al. 2015).

About 1.5 million years ago hominins started to quarry larger flakes to make bifacial hand axes, picks, and cleavers of Acheulean (1.2–0.1 million years ago) style. The chipping of a single core yielded sharp cutting edges less than 20 cm long, and these practices produced a large variety of special handheld stone tools ([]{#c10752_002.xhtml#fig_001a}fig. 2.1). Wooden spears were essential for hunting larger animals. In 1948 a nearly complete spear found inside an elephant skeleton in Germany was dated to the last interglacial period (115,000–125,000 years ago). In 1996 throwing spears found at a Schöningen open-cast lignite mine were dated to 380,000–400,000 years ago (Thieme 1997), and stone points were hafted to wooden spears beginning about 300,000 years ago.

![[]{#c10752_002.xhtml#fig_001}[[Figure 2.1](#c10752_002.xhtml#fig_001a)]{.figureLabel} Acheulean stone tools, first made by *Homo ergaster,* were formed by the removal of stone flakes to create specialized cutting blades (Corbis).](https://raw.githubusercontent.com/hdgroup/picasso/main/2023/energy-and-civilization/10752_002_fig_001.jpg)

But new discoveries in South Africa put the earliest date of making hafted multicomponent tools about 200,000 years earlier than previously reported: Wilkins and co-workers (2012) concluded that stone points from Kathu Pan, made about 500,000 years ago, functioned as spear tips. True long-range projectile weaponry evolved in Africa between 90,000 and 70,000 years ago (Rhodes and Churchill 2009). Another recent South African discovery showed that a significant technical advance—the production of small bladelets (microliths), primarily from heat-treated stone, to be used in making composite tools—took place as early as 71,000 years ago (Brown et al. 2012). Larger composite tools became common only about 25,000 years ago (Europe’s Gravettian period) with the production of ground and hafted adzes and axes, and with the more efficient flaking of flint, yielding many sharp-edged tools; harpoons, needles, saws, pottery, and items from woven fibers (clothes, nets, baskets) were also invented and adopted during that time.

Magdalenian techniques (between 17,000 and 12,000 years ago; the era is named after a rock shelter at La Madeleine in southern France where the tools were discovered) produced up to 12 m of microblade edges from a single stone, and experiments with their modern replicas (mounted on spears) show their hunting efficacy (Pétillon et al. 2011). A stone-tipped spear became an even more potent weapon after the invention of spear throwers during the late Paleolithic. A leveraged throw easily doubled the velocity of the weapon and reduced the necessity for a closer approach. Stone-tipped arrows carried these advantages further, with an added gain in accuracy.
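Doubling a weapon's velocity does more than double its effect, because kinetic energy grows with the square of speed (E = mv²/2). The spear mass and throwing speeds below are hypothetical round numbers chosen only to show the scaling. Air drag is ignored.

```python
def kinetic_energy_j(mass_kg: float, velocity_m_s: float) -> float:
    """Kinetic energy E = m * v**2 / 2, in joules (air drag ignored)."""
    return 0.5 * mass_kg * velocity_m_s ** 2


# Hypothetical projectile: a 0.5 kg spear thrown by hand at 15 m/s,
# versus the same spear launched at twice that speed with a spear thrower.
hand = kinetic_energy_j(0.5, 15.0)
thrower = kinetic_energy_j(0.5, 30.0)
print(f"hand: {hand:.0f} J, spear thrower: {thrower:.0f} J, ratio {thrower / hand:.1f}")
```

Whatever the actual masses and speeds, a doubled velocity carries four times the kinetic energy.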

We will never know the earliest dates for the controlled use of fire for warmth and cooking: in the open, any relevant evidence was removed by many subsequent events, and in occupied caves it was destroyed by generations of later use. The earliest date for a well-attested use of controlled fire has been receding: Goudsblom (1992) put it at about 250,000 years ago; a dozen years later Goren-Inbar and co-workers (2004) pushed it as far back as 790,000 years ago, while the fossil record suggests that the consumption of some cooked food took place as early as 1.9 million years ago. But without any doubt, by the Upper Paleolithic—30,000 to 20,000 years ago, when Homo sapiens sapiens displaced Europe’s Neanderthals—the use of fire was widespread (Bar-Yosef 2002; Karkanas et al. 2007).

Cooking has always been seen as an important component of human evolution, but Wrangham (2009) believes that it had a “monstrous” effect on our ancestors because it greatly expanded the range and quality of available food, and also because its adoption brought many physical changes (including smaller teeth and a less voluminous digestive tract) and behavioral adjustments (such as the need to defend stores of accumulated food, which promoted protective female-male bonds) that led eventually to complex socialization, sedentary lives, and “self-domestication.” All prehistoric cooking was done with open fires, with meat suspended above the flames, buried in hot embers, placed on hot rocks, encased in a tough skin, covered by clay, or put with hot stones into leather pouches filled with water. Owing to the variety of settings and methods, it is impossible to quote typical fuel conversion efficiencies. Experiments show that 2–10% of wood’s energy ends up as useful heat for cooking, and plausible assumptions indicate annual wood consumption maxima of 100–150 kg/year/capita (box 2.1).

Box 2.1 Wood consumption in open-fire cooking of meat

Realistic assumptions for setting plausible maxima of wood consumption in open-fire meat cooking during the late Paleolithic are as follows (Smil 2013a): average daily food energy intake of 10 MJ/capita (adequate for adults, higher than the mean for entire populations), with meat being 80% (8 MJ) of the total food intake; a food energy density of animal carcasses of 8–10 MJ/kg (typical for mammoths, generally 5–6 MJ/kg for large ungulates); an average ambient temperature of 20°C in warm and a mean of 10°C in colder climates; cooked meat at 80°C (77°C suffices for well-done meat); a heat capacity of meat of about 3 kJ/kg°C; cooking efficiency of an open fire at just 5%; and an average energy density of air-dried wood of 15 MJ/kg. These assumptions imply an average daily per capita intake of nearly 1 kg of mammoth meat (and about 1.5 kg of large ungulate meat) and a daily need for about 4–6 MJ of wood. The annual total would be 1.5–2.2 GJ or 100–150 kg of (some fresh and some air-dried) wood. For 200,000 people who lived 20,000 years ago the global need would be 20,000–30,000 t, a negligible share (on the order of 10^–8^) of the standing pre-agricultural woody phytomass.
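The chain of assumptions in box 2.1 can be reproduced step by step. The sketch below uses the box's stated values. The function names and the choice of cases to print are illustrative. In the two colder-climate cases it gives about 4.2 MJ/day (mammoth meat at 8 MJ/kg) and about 6.1 MJ/day (large-ungulate meat at 5.5 MJ/kg), close to the box's 4–6 MJ. Warmer settings need less wood.

```python
# Assumptions listed in box 2.1 (Smil 2013a).
MEAT_MJ_PER_DAY = 8.0            # 80% of a 10 MJ daily food intake
HEAT_CAPACITY_KJ_PER_KG_C = 3.0  # heat capacity of meat
COOKED_C = 80.0                  # final temperature of cooked meat
FIRE_EFFICIENCY = 0.05           # share of wood energy reaching the meat
WOOD_MJ_PER_KG = 15.0            # air-dried wood


def daily_wood_mj(meat_mj_per_kg: float, ambient_c: float) -> float:
    """Wood energy (MJ) needed per person per day to cook the day's meat."""
    meat_kg = MEAT_MJ_PER_DAY / meat_mj_per_kg
    heat_mj = meat_kg * HEAT_CAPACITY_KJ_PER_KG_C * (COOKED_C - ambient_c) / 1_000
    return heat_mj / FIRE_EFFICIENCY


def annual_wood_kg(wood_mj_per_day: float) -> float:
    """Annual per capita wood mass for a given daily wood energy need."""
    return wood_mj_per_day * 365 / WOOD_MJ_PER_KG


def global_wood_t(per_capita_kg: float, population: int = 200_000) -> float:
    """Global annual wood use in tonnes."""
    return per_capita_kg * population / 1_000


mammoth_cold = daily_wood_mj(8.0, 10.0)   # mammoth meat, colder climate
ungulate_cold = daily_wood_mj(5.5, 10.0)  # large-ungulate meat, colder climate
print(f"daily wood: {mammoth_cold:.1f}-{ungulate_cold:.1f} MJ")
print(f"annual wood at 4-6 MJ/day: {annual_wood_kg(4.0):.0f}-{annual_wood_kg(6.0):.0f} kg")
print(f"global: {global_wood_t(100):,.0f}-{global_wood_t(150):,.0f} t/year")
```

The annual figures come out at about 97–146 kg, which the box rounds to 100–150 kg.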

In addition to warming and cooking, fire was also used as an engineering tool: modern humans were heat-treating stones to improve their flaking properties as early as 164,000 years ago (Brown et al. 2009). And Mellars (2006) suggested there is evidence for the controlled burning of vegetation in South Africa as early as 55,000 years ago. Woodland burning as a tool of environmental management by foragers during the early Holocene would have been done to aid hunting (by promoting the regrowth of forage to attract animals and improving visibility), to make human mobility easier, or to improve or synchronize the gathering of plant foods (Mason 2000).

The great spatial and temporal variability of the archaeological record precludes making any simple generalizations concerning the energy balances of prehistoric societies. Descriptions of first contacts with surviving foragers and their anthropological studies provide uncertain analogies: information on groups that survived in extreme environments long enough to be studied by modern scientific methods offers a limited insight into the lives of prehistoric foragers in more equable climates and more fertile areas. Moreover, many studied foraging societies were already affected by prolonged contact with pastoralists, farmers, or overseas migrants (Headland and Reid 1989; Fitzhugh and Habu 2002). But the absence of a typical foraging pattern does not preclude recognition of a number of biophysical imperatives governing energy flows and determining the behavior of gathering and hunting groups.

Foraging Societies

The most comprehensive collections of reliable evidence show that the average population densities of modern foraging populations—reflecting a variety of natural habitats and food acquisition skills and techniques—ranged over three orders of magnitude (Murdock 1967; Kelly 1983; Lee and Daly 1999; Marlowe 2005), from less than a single person/100 km^2^ to several hundred people/100 km^2^. The global mean of 25 people/100 km^2^ for 340 studied cultures was too low to support more complex societies with increasing functional specialization and social stratification. The average densities of foragers were lower than the densities of similarly massive herbivorous mammals that were able to digest abundant cellulosic phytomass.

Whereas allometric equations predict about five 50 kg mammals/km^2^, chimpanzee densities are between 1.3 and 2.4 animals/km^2^, and the densities of hunter-gatherers surviving into the twentieth century were well below one person/km^2^ in warm climates, only 0.24 in the Old World and 0.4 in the New World (Marlowe 2005; Smil 2013a). Population densities were significantly higher for groups combining the gathering of abundant plants with hunting (well-studied examples include groups in postglacial Europe and, more recently, in the basin of Mexico) and for coastal societies heavily dependent on aquatic species (with well-documented archaeological sites in the Baltic region and more recent anthropological studies in the Pacific Northwest).
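Converting the densities quoted above to a common unit, and then to the average area available to each individual, makes the gap easier to grasp. The sketch below only rearranges figures given in the text. The function names are illustrative.

```python
def per_km2(people_per_100_km2: float) -> float:
    """Convert a density given per 100 km2 into a density per km2."""
    return people_per_100_km2 / 100


def km2_per_individual(density_per_km2: float) -> float:
    """Average area available to each individual at a given density."""
    return 1 / density_per_km2


densities = {
    "foragers, global mean (340 cultures)": per_km2(25),
    "foragers, warm climates, Old World": 0.24,
    "foragers, warm climates, New World": 0.4,
    "chimpanzees, low estimate": 1.3,
    "chimpanzees, high estimate": 2.4,
    "50 kg mammals, allometric prediction": 5.0,
}
for label, d in densities.items():
    print(f"{label:38s} {d:5.2f}/km2 -> {km2_per_individual(d):5.2f} km2 each")
```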

Mollusk collecting, fishing, and near-shore hunting of sea mammals sustained the highest foraging densities and led to semipermanent, even permanent, settlements. The coastal villages of the Pacific Northwest, with their large houses and organized communal hunting of sea mammals, were exceptional in their sedentism. These large density variations were not a simple function of biospheric energy flows: they were not uniformly decreasing poleward and increasing equatorward (in proportion to higher photosynthetic productivity), or corresponding to the total mass of animals available for hunting. They were determined by ecosystemic variables, by a relative dependence on plant and animal foods, and by the use of seasonal storage. Much like nonhuman primates, all foragers were omnivorous, but killing larger animals was a major energetic challenge as it targeted a much smaller reservoir of edibles than plant gathering, a natural consequence of the diminishing energy transfer between trophic levels.

Herbivores consume only 1–2% of the net primary productivity in deciduous temperate forests and up to 50–60% in some tropical grasslands, with 5–10% most representative of terrestrial grazing (Smil 2013a). Generally less than 30% of the ingested phytomass is digested; most of it is respired, and in mammals and birds only 1–2% of it is converted into zoomass. As a result, the most commonly hunted herbivores embodied less than 1% of the energy initially stored in the phytomass of the ecosystems they inhabited. This reality explains why hunters preferred to kill animals that combined a relatively large adult body mass with high productivity and high territorial density: wild pigs (90 kg) and deer and antelopes (mostly 25–500 kg) were common targets.
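The percentages above can be chained to show how little of the primary production ends up in herbivore bodies. The sentence leaves open whether the 1–2% conversion applies to ingested or to digested phytomass. The sketch below (an illustration, not the author's own calculation) therefore computes both readings, using the upper ends of the representative ranges. Either way the result stays far below 1%.

```python
def zoomass_share(grazed: float, digested: float, converted: float) -> float:
    """Fraction of primary production that becomes herbivore zoomass."""
    return grazed * digested * converted


# Reading 1: 1-2% of the *digested* energy becomes zoomass (<30% is digested).
via_digested = zoomass_share(grazed=0.10, digested=0.30, converted=0.02)
# Reading 2: 1-2% of the *ingested* phytomass becomes zoomass.
via_ingested = zoomass_share(grazed=0.10, digested=1.0, converted=0.02)
print(f"upper bounds: {via_digested:.2%} or {via_ingested:.2%} of primary production")
```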

Where such animals were relatively common, such as on tropical or temperate grasslands or in tropical woodlands, hunting was more rewarding, but, contrary to the common perception of an abundance of animal species, tropical forests were an inferior ecosystem for hunting. Most tropical forest animals are small arboreal folivorous and frugivorous species (monkeys, birds) that are active and inaccessible in high tree canopies (many are also nocturnal), and hunting them yields low energy returns. Sillitoe (2002) found both gathering and hunting in a tropical rain forest of the Papua New Guinea highlands to be costly, with foragers expending up to four times more energy on hunting than they obtained in food. Obviously, such a poor energy return would not allow hunting to be a primary means of food provision (the negative energy return could be justified only by the capture of animal protein), and some forms of shifting farming were required to provide enough food.
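Sillitoe's finding can be restated as an energy return ratio: food energy obtained divided by energy expended. A minimal sketch; the 1 MJ and 4 MJ inputs are hypothetical values chosen only to reproduce the "four times more energy" case, not measurements from the study:

```python
def energy_return(food_energy_mj: float, expended_mj: float) -> tuple[float, float]:
    """Return (energy gained per unit of energy spent, net energy balance in MJ)."""
    if expended_mj <= 0:
        raise ValueError("energy expended must be positive")
    return food_energy_mj / expended_mj, food_energy_mj - expended_mj


# Hypothetical hunt: 4 MJ spent to obtain 1 MJ of food.
ratio, net = energy_return(1.0, 4.0)
print(f"return ratio {ratio:.2f}, net balance {net:+.1f} MJ")
```

Any ratio below 1 is a net loss that has to be covered by other food, which is why such hunting could persist only alongside some form of cultivation.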

Bailey and co-workers (1989) concluded that there were no unambiguous ethnographic accounts of foragers who lived in tropical rain forests without some reliance on domesticated plants and animals. Bailey and Headland (1991) later revised that conclusion because archaeological evidence from Malaysia indicated that high densities of sago and pigs would allow exceptions. Similarly, gathering was often surprisingly unrewarding in the species-rich tropics, as well as in temperate forests. These ecosystems store most of the planet’s phytomass, but they do so mostly in the dead tissues of tall tree trunks, whose cellulose and lignin humans cannot digest (Smil 2013a). Energy-rich fruits and seeds are a very small portion of total plant mass and are often inaccessible in high canopies; seeds are often protected by hard coats and need energy-intensive processing before consumption. Gathering in tropical forests also required more searching: a great variety of species means there may be considerable distances between the trees or vines whose parts are ready for collection (fig. 2.2). The harvesting of Brazil nuts is a perfect example of these constraints (box 2.2).

![Figure 2.2 Tropical rain forests are rich in species but relatively poor in plants that would support larger foraging populations. This image shows canopies at La Fortuna, Costa Rica (Corbis).](https://raw.githubusercontent.com/hdgroup/picasso/main/2023/energy-and-civilization/10752_002_fig_002.jpg)

Box 2.2 Harvesting Brazil nuts

Because of their high lipid content (66%), Brazil nuts contain about 27 MJ/kg (compared to about 15 MJ/kg for cereal grains), are about 14% protein, and are also a source of potassium, magnesium, calcium, phosphorus, and high levels of selenium (Nutrition Value 2015). Harvesting the nuts is both demanding and dangerous. Bertholletia excelsa grows up to 50 m, with individual trees widely scattered. Between 8 and 24 nuts are contained in heavy (up to 2 kg) capsules covered with a coconut-like hard endocarp. Nut foragers must time their harvest: too early, and the pods are still inaccessible in the canopies and gatherers must waste energy on another trip; too late, and agoutis (Dasyprocta punctata), which are large rodents and the only animals able to open the fallen pods, will eat the seeds right away or bury some of them in food caches (Haugaasen et al. 2010).
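The link between lipid content and energy density can be checked with general food energy conversion factors. A minimal sketch, assuming rounded Atwater-type factors of 37 kJ/g for fat and 17 kJ/g for protein and carbohydrate; these factors are not given in the box, and carbohydrate is left at zero because the box does not state it:

```python
# General Atwater-type conversion factors in kJ per gram (an assumption, not from the text).
FAT_KJ_PER_G = 37.0
PROTEIN_KJ_PER_G = 17.0
CARBOHYDRATE_KJ_PER_G = 17.0


def energy_density_mj_per_kg(fat: float, protein: float, carbohydrate: float = 0.0) -> float:
    """Energy density from mass fractions; 1 kJ/g is the same as 1 MJ/kg."""
    return (fat * FAT_KJ_PER_G
            + protein * PROTEIN_KJ_PER_G
            + carbohydrate * CARBOHYDRATE_KJ_PER_G)


# Composition from the box: 66% lipid, about 14% protein.
brazil_nut = energy_density_mj_per_kg(fat=0.66, protein=0.14)
print(f"Brazil nuts, lipid and protein only: {brazil_nut:.1f} MJ/kg")
```

Lipids and protein alone give about 26.8 MJ/kg, close to the 27 MJ/kg cited, with lipids supplying roughly 90% of that total.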

In contrast to the often frustrating hunting in tropical and boreal forests, grasslands and open woodlands offered excellent opportunities for collecting and hunting. They store much less energy per unit area than a dense forest, but a higher share of it comes in the form of easily collectible and highly nutritious seeds and fruits, or as concentrated patches of large starchy roots and tubers. High energy density (as much as 25 MJ/kg) made nuts particular favorites, and some of them, such as acorns and chestnuts, were also easy to harvest. And unlike in forests, many animals grazing on grasslands can grow to very large sizes, often move in massive herds, and give excellent returns on energy invested in the hunt.

And hominins could secure meat on grasslands and woodlands even without any weapons, as scavengers, as unmatched runners, or as clever schemers. In light of the unimpressive physical endowment of early humans and the absence of effective weapons, it is most likely that our ancestors were initially much better scavengers than hunters (Blumenschine and Cavallo 1992; Pobiner 2015). Large predators—lions, leopards, saber-toothed cats—often left behind partially eaten herbivore carcasses. This meat, or at least the nutritious bone marrow, could be reached by alert early humans before it was devoured by vultures, hyenas, and other scavengers. But Domínguez-Rodrigo (2002) has argued that scavenging would not provide enough meat and that only hunting could secure sufficient animal protein on grasslands. In any case, bipedalism and an ability to sweat better than any other mammal also made it possible for humans to chase even the fastest herbivores to exhaustion (box 2.3).

Box 2.3 Running and heat dissipation by humans

All quadrupeds have optimum speeds for different gaits, such as walk, trot, and canter in horses. The energy cost of human running is relatively high compared to the running cost of similarly massive mammals, but unlike them, humans can uncouple that cost from running speed for common velocities between 2 and 6 m/s (Carrier 1984; Bramble and Lieberman 2004). Bipedalism and efficient heat dissipation explain this feat. Quadrupedal ventilation is limited to one breath per locomotor cycle: the thoracic bones and muscles must absorb the impact on the front limbs as dorsoventral bending rhythmically compresses and expands the thorax. Human breathing frequency, by contrast, can vary relative to stride frequency, so humans can run at a variety of speeds, whereas optimal quadruped speed is structurally determined.
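What "uncoupling cost from speed" means can be shown with a constant cost of transport. A minimal sketch, assuming a net running cost of about 4 J per kg of body mass per metre (a commonly quoted approximation, not a figure from the text) and a hypothetical 60 kg runner covering 20 km:

```python
# Assumed net metabolic cost of human running in J per kg per metre.
# About 4 J/(kg*m) is a common approximation; it is NOT taken from the text.
COST_J_PER_KG_M = 4.0


def running_energy_kj(mass_kg: float, distance_m: float) -> float:
    """Energy to cover a distance; with a constant cost per metre, speed drops out."""
    return COST_J_PER_KG_M * mass_kg * distance_m / 1000.0


def running_power_w(mass_kg: float, speed_m_s: float) -> float:
    """Metabolic power (J/s) needed to hold a given speed."""
    return COST_J_PER_KG_M * mass_kg * speed_m_s


mass, distance = 60.0, 20_000.0  # hypothetical runner and distance
for speed in (2.0, 4.0, 6.0):    # the 2-6 m/s range cited in the text
    hours = distance / speed / 3600.0
    print(f"{speed:.0f} m/s: {running_energy_kj(mass, distance):.0f} kJ "
          f"over {hours:.1f} h at {running_power_w(mass, speed):.0f} W")
```

Under this assumption the energy needed for the chase is the same at any speed in the range; only the power (and therefore the heat to be shed) rises with speed, which is where sweating becomes decisive.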

The extraordinary human ability to thermoregulate rests on very high rates of sweating. Horses lose water at an hourly rate of 100 g/m^2^ of their skin, and camels lose up to 250 g/m^2^, but people lose more than 500 g/m^2^, with peak rates of more than 2 kg/hour (Torii 1995; Taylor and Machado-Moreira 2013). Such perspiration rates translate to heat loss of 550–625 W, enough to regulate temperature even during extremely hard work. People can also drink less than they perspire and make up for any temporary partial dehydration hours later. Running turned humans into diurnal, high-temperature predators that could chase animals to exhaustion (Heinrich 2001; Liebenberg 2006). Documented chases include those of the Tarahumara Indians of northern Mexico running down deer and of Paiutes and Navajos besting pronghorn antelopes. The Kalahari Basarwa could chase duikers, gemsbok, and, during the dry season, even zebras to exhaustion, as some Australian Aborigines did with kangaroos. Hunters running barefoot had energy costs about 4% lower than those of modern runners wearing athletic shoes, and they suffered fewer acute ankle and chronic lower leg injuries (Warburton 2001).
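The 550–625 W figure is consistent with the latent heat of evaporating sweat. A minimal sketch, assuming a latent heat of about 2.43 kJ per gram of water near skin temperature, a skin area of 1.8 m^2^ for a typical adult (neither value is from the text), and that all of the sweat evaporates rather than drips:

```python
# Assumptions not taken from the text:
LATENT_HEAT_J_PER_G = 2430.0  # heat absorbed by evaporating 1 g of sweat near skin temperature
BODY_SURFACE_M2 = 1.8         # skin area of a typical adult


def evaporative_heat_flux_w_per_m2(sweat_g_per_m2_h: float) -> float:
    """Cooling per square metre of skin, assuming all of the sweat evaporates."""
    return sweat_g_per_m2_h * LATENT_HEAT_J_PER_G / 3600.0


def whole_body_heat_loss_w(sweat_g_per_m2_h: float, area_m2: float = BODY_SURFACE_M2) -> float:
    """Total evaporative cooling for a given skin area."""
    return evaporative_heat_flux_w_per_m2(sweat_g_per_m2_h) * area_m2


# Hourly sweating rates per square metre of skin, from the text.
for species, rate in (("horse", 100.0), ("camel", 250.0), ("human", 500.0)):
    print(f"{species}: {evaporative_heat_flux_w_per_m2(rate):.0f} W/m^2")
print(f"human whole body: {whole_body_heat_loss_w(500.0):.0f} W")
```

At 500 g/m^2^ per hour the sketch gives about 608 W for the whole body, inside the cited range; faster sweating adds little cooling once evaporation can no longer keep pace.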

Carrier (1984) believes that the outstanding rates of human heat dissipation provided a notable evolutionary advantage that served our ancestors well in appropriating a new niche, that of diurnal, high-temperature predators. The human ability to sweat profusely and hence to work hard in hot environments was retained by populations that migrated to colder climates: there are no major differences in the density of eccrine glands among populations of different climate zones (Taylor 2006). People from middle and high latitudes can match the sweating rates of hot-climate natives after a short period of acclimatization.

But once adequate tools were invented and adopted, hunting with them was preferable to running down the prey. After examining 51 assemblages from the Middle Stone Age and 98 from the Later Stone Age, Faith (2007) confirmed that early African hunters were fully competent at killing large ungulates, including buffaloes. The energy imperatives of hunting large animals also made an incalculable contribution to human socialization. Trinkaus (1987, 131–132) concluded that “most of the distinguishing human characteristics, such as bipedalism, manual dexterity, and elaborate technology, and marked encephalization can be viewed as having been promoted by the demands of an opportunistic foraging system.”

Hunting’s role in the evolution of human societies is self-evident. Individual success in hunting large animals with primitive weapons was unacceptably low, and viable hunting groups had to maintain minimum cooperative sizes in order to track wounded animals, butcher them, transport their meat, and then pool the gains. Communal hunting brought by far the greatest rewards, with well-planned and well-executed herding of animals into confined runs (using brush and stone drive lines, wooden fences, or ramps) and capturing them in prepared pens or natural traps, or—perhaps the simplest and most ingenious solution—stampeding them over cliffs (Frison 1987). Many large herbivores—mammoths, bison, deer, antelopes, mountain sheep—could be slaughtered in such ways, providing caches of frozen or processed (smoked, pemmican) meat.

Head-Smashed-In Buffalo Jump near Fort Macleod, Alberta, a UNESCO World Heritage Site, is one of the more spectacular sites of this inventive hunting strategy, which was used at the site for about 5,700 years. “To start the hunt … young men … would entice the herd to follow them by imitating the bleating of a lost calf. As the buffalo moved closer to the drive lanes (long lines of stone cairns were built to help the hunters direct the buffalo to the cliff kill site), the hunters would circle behind and upwind of the herd and scare the animals by shouting and waving robes” and then stampeded the herd over the cliff (UNESCO 2015a). The net energy return in animal protein and fat was high. Late Pleistocene hunters may have become so skillful that many students of the Quaternary era concluded that hunting was largely (even completely) responsible for a relatively rapid disappearance of the late Paleolithic megafauna, animals with a body mass greater than 50 kg (Martin 1958, 2005; Fiedel and Haynes 2004), but the verdict remains uncertain (box 2.4).

Box 2.4 Extinction of the Late Pleistocene megafauna

The persistent killing of slow-breeding animals (those with a single offspring born after a long gestation) could lead to their extinction. If we assume that Late Pleistocene foragers had a high daily food requirement of 10 MJ/capita, that they ate mostly meat, and that most of it (80%) came from megafauna, then their population of two million people would need nearly 2 Mt (fresh weight) of meat (Smil 2013a). If mammoths were the only hunted species, that would have required the annual killing of 250,000–400,000 animals. Megaherbivore hunting also targeted other large mammals (elephants, giant deer, bison, aurochs), and procuring 2 Mt of meat from a mixture of these species would have required an annual kill of some two million animals. A more likely explanation for the Late Pleistocene extinctions is a combination of natural (climate and vegetation change) and anthropogenic (hunting and fire) factors (Smil 2013a).
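The box's arithmetic can be partly retraced. The text does not state the meat share of the diet or the energy density of meat, so this minimal sketch does not try to derive the 2 Mt total; it only restates the annual food energy requirement and the average meat yield per carcass implied by the stated kill numbers (treating "nearly 2 Mt" as 2 Mt):

```python
PEOPLE = 2_000_000
DAILY_FOOD_MJ = 10.0
ANNUAL_MEAT_T = 2_000_000.0  # "nearly 2 Mt" of fresh-weight meat, rounded to 2 Mt


def annual_food_energy_pj(people: int, daily_mj: float) -> float:
    """Total yearly food energy requirement; 1 PJ = 1e9 MJ."""
    return people * daily_mj * 365 / 1e9


def implied_meat_per_animal_t(total_meat_t: float, animals_killed: float) -> float:
    """Average meat yield per carcass implied by a given annual kill."""
    return total_meat_t / animals_killed


print(f"food requirement: {annual_food_energy_pj(PEOPLE, DAILY_FOOD_MJ):.1f} PJ/year")
for label, kills in (("mammoths, 400,000 killed", 400_000),
                     ("mammoths, 250,000 killed", 250_000),
                     ("mixed megafauna, 2 million killed", 2_000_000)):
    print(f"{label}: {implied_meat_per_animal_t(ANNUAL_MEAT_T, kills):.1f} t of meat each")
```

The stated kills correspond to about 5–8 t of meat per mammoth and about 1 t per animal for the mixed kill, against a total requirement of roughly 7.3 PJ of food energy a year.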

All pre-agricultural societies were omnivorous; they did not have the luxury of ignoring any available food resource. Although foragers ate a large variety of plant and animal species, usually only a few principal foodstuffs dominated their diets. A preference for seeds among gatherers was inevitable. Besides being rather easy to collect and store, seeds combine a high energy content with relatively high protein shares. Wild grass seeds have as much food energy as cultivated grains (wheat is at 15 MJ/kg), while nuts have an energy density up to about 80% higher (walnuts contain 27.4 MJ/kg).

All wild meat is an excellent source of protein, but most of it contains very little fat, and hence it has very low energy density—less than half that of grains for small, lean mammals. Not surprisingly, there was a widespread hunting preference for large and relatively fatty species. A single small mammoth provided as much edible energy as 50 reindeer, while a bison was easily equal to 20 deer (box 2.5). This is why our Paleolithic ancestors were willing to ambush huge mammoths with their simple stone-tipped weapons, or why Indians on the North American plains, seeking fatty meat for preparing long-lasting pemmican, spent so much energy on pursuing bison.

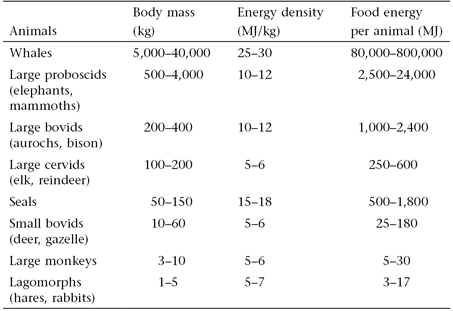

Box 2.5 Body masses, energy densities, and food energy content of hunted animals

But energy considerations alone cannot provide a full explanation of foraging behavior. If they were always dominant, then optimal foraging, whereby gatherers and hunters try to maximize their net energy gain by minimizing the time and effort spent in foraging, would have been their universal strategy (Bettinger 1991). Optimal foraging explains the preference for hunting large, fatty mammals, or for collecting less nutritious plant parts that need no processing rather than energy-dense nuts that may be hard to crack. Many foragers undoubtedly behaved in ways that maximized their net energy return, but other existential imperatives often worked against such behavior. Among the most important were the availability of safe night shelters, the need to defend territories against competing groups, and the need for reliable water sources and for vitamins and minerals. Food preferences and attitudes toward work were also important (box 2.6).
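One standard way to formalize optimal foraging is the prey-choice (diet-breadth) model: rank resources by profitability (energy per hour of handling) and add them to the diet only while an item's profitability is at least the overall return rate of the diet already chosen. The text does not describe this model; it is brought in here as an illustration, and all three resources and their numbers are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class Resource:
    name: str
    energy_kj: float        # net food energy per item (hypothetical)
    handling_h: float       # hours to pursue, kill or crack, and process one item
    encounter_per_h: float  # items encountered per hour of searching


def return_rate(diet: list[Resource]) -> float:
    """Long-term energy gain per hour (search plus handling) for a given diet."""
    gain = sum(r.encounter_per_h * r.energy_kj for r in diet)
    time = 1.0 + sum(r.encounter_per_h * r.handling_h for r in diet)
    return gain / time


def optimal_diet(resources: list[Resource]) -> list[Resource]:
    """Add resources in order of profitability while each still raises the return rate."""
    ranked = sorted(resources, key=lambda r: r.energy_kj / r.handling_h, reverse=True)
    diet: list[Resource] = []
    for r in ranked:
        if r.energy_kj / r.handling_h < return_rate(diet):
            break
        diet.append(r)
    return diet


RESOURCES = [
    Resource("large game", 200_000.0, 20.0, 0.01),
    Resource("tubers", 2_000.0, 0.5, 0.5),
    Resource("hard-shelled nuts", 1_500.0, 1.5, 1.0),
]
chosen = optimal_diet(RESOURCES)
print([r.name for r in chosen], f"{return_rate(chosen):.0f} kJ/h")
```

With these made-up values the nuts are excluded: despite their energy, the long cracking time keeps their profitability below the return already earned from game and tubers. The model also predicts that whether an item enters the diet depends on how often the higher-ranked items are encountered, not on its own abundance.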

Box 2.6 Food preferences and attitudes toward work